AI systems are able to describe what is going on in an image. They generate what are called captions that describe the content of images. For example, a boy skating wearing a helmet: This could be a simple caption extracted from a picture.

These systems learn the caption generation task with thousands and thousands of images paired with captions written by humans. After being trained with these pairs, the AI system is expected to generate a text that describes the content of a new image.

One of the most well-known datasets with image-caption pairs is the Coco dataset. This dataset is one of the most important resources to train the AI system because it contains 200K+ annotated images. The captions were written by humans instructed to strictly describe what is going on in the pictures, with no extra information- for example, the name of the people depicted- with length limitations that forced them to reduce distracting information. These are in a way canonical captions.

In the description of cultural heritage images such as paintings one may wonder if the system can be trained with the Coco dataset pairs. In case the system was trained with these pairs, one important issue must be taken into account: captions describe present-day and real-life scenes. Therefore, a system trained with these pairs would fail to describe paintings that depict objects with symbolic meaning, non-existent entities like angels or dragons, and scenes that are not depicted in these datasets such as crucifixions, annunciations and killings. Besides, the system would not be aware of anachronisms and generate a caption like a person on a bike for a painting of Saint George killing the dragon. People with helmets on bikes are more represented in the Coco dataset than knights wearing helmets riding horses. In the TimeMatrix Webinar that took place in September last year, we explained how the problem of anachronisms are tackled within the Saint George on a Bike project.

Texts accompanying images of paintings in digital art collections are expected to be good for training a caption generator for paintings. However, it is very difficult to find canonical captions in art collections. There are important aspects that make texts accompanying images in art collections not to be canonical. Figure 1 shows the painting Jacob’s Dream and the text accompanying it. Notice that the sentences referring to the content of the image (in bold) are surrounded by sentences referring to things, people, places that are not depicted in the painting but refer to extraneous items such as the painter’s style, and his artistic motivations.

Figure 1. Jacob’s dream

This painting tells of Jacob the Patriarch´s mysterious dream, as told in Genesis. He appears asleep, lying on his left shoulder with a tree behind him. On the other side is the ladder of light, by which the angels ascend and descend. This subject demonstrates Ribera´s skill at constructing metaphoric discourse. He uses the image of a shepherd resting in the countryside to describe one of the best-known Bible stories.

Figure 2. Fragment of the text accompanying the painting Jacob’s dream

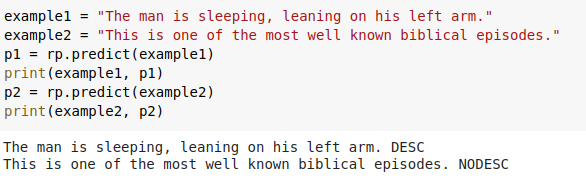

In order to parallelise the image with texts that refer to its contents a descriptive text classifier has been performed. Figure 2 shows an example of how the classifier distinguishes the sentences with extraneous information (NODESC) from the ones that refer to the content of the painting (DESC).

Figure 3. Example of DESC/NODESC classification by the descriptive text classifier

Another important issue is the fact that descriptive texts in art collections are full of proper nouns (e.g. Jesus, Mary, Moses, Saint George, Bacchus, etc.). A descriptive sentence like Jesus carrying the Cross is not canonical in the Coco dataset sense of canonical because, as explained before, Coco canonical captions cannot contain the names of the people depicted. So in order for the classifier to classify Christ carrying the cross as descriptive, Christ- such as other proper nouns- is replaced with the Coco dataset reference a person. Figure 3 shows how the replacement of Christ with a person makes the classifier label the sentence as descriptive. The words in green are the ones that contribute the most to the classification.

Notice how Christ contributes to the NODESC classification whereas the contribution of a person in the DESC classification is remarked with a darker green.

Figure 4. Example of change in the classification when the proper noun is replaced with person

Other replacements are the substitution of words stylistically typical of painting collections (e.g: vessel, vestment) with a Coco synonym (e.g: ship or boat instead of vessel, dress instead of vestment) or words not found in the Coco dataset referring to a person (e.g: saint) which are replaced with the Coco hypernym a person.

This procedure has been evaluated by classifying sentences in the Europeana collection. The classifier improved significantly when the procedure was performed, reaching a high value of quality in contrast to the results if the procedure was not performed.

In conclusion, Saint George on a Bike project is highly concerned in obtaining the data necessary for AI to understand cultural heritage images. There is still some way to go in providing image-captions pairs but we will continue working to finally attain this goal.